New HPC resources at the UPV/EHU to serve research

The Scientific Computing Service of the UPV/EHU (IZO-SGI) has brought the new computing resources of the Arina cluster into service. The cluster offers high performance computing services to the university's scientific community, as well as to companies and other public institutions. With this renewal, the service continues to offer researchers cutting-edge resources to maintain and improve their technical and scientific output and their international competitiveness.

The extension of the cluster aims to cover needs that have been detected in several areas of computation. We highlight the following:

- A large-memory system (512 GB of RAM) oriented to genomic sequencing, but also applicable to other areas.

- A node with 2 Nvidia Kepler K20 GPUs for any kind of application that uses this increasingly common type of computing system.

- A high-capacity (40 TB), high-performance file system with ~6 GB/s of input/output bandwidth. That is, it can hold the contents of about 60,000 CDs and can read or write the equivalent of 9 CDs in just one second. This file system is not meant for static data; it is used for temporary storage during calculations. Genetics applications, quantum physics and chemistry, big data, etc. will take advantage of this system.

- All the elements are connected by a high-bandwidth (56 Gb/s), low-latency network, equivalent to 560 domestic connections at 100 Mb/s. The file system is also connected through this network.

- Finally, of course, computing power based on the latest generation of Intel Xeon Ivy Bridge processors, with plenty of RAM (128 GB) and solid-state disks, which are much faster than traditional hard disks.
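The everyday comparisons in the list above (CDs and domestic connections) can be checked with a little arithmetic. The following is only a rough sketch: it assumes a CD holds 700 MB and uses decimal units, neither of which is stated in this article, and the names `CD_BYTES` and `cds_equivalent` are made up for the illustration.

```python
# Assumption (not from the article): one CD holds 700 MB, decimal units throughout.
CD_BYTES = 700 * 10**6


def cds_equivalent(n_bytes: float) -> float:
    """Return how many 700 MB CDs correspond to n_bytes."""
    return n_bytes / CD_BYTES


# File system capacity: 40 TB expressed in CDs.
capacity_cds = cds_equivalent(40 * 10**12)

# File system bandwidth: ~6 GB transferred in one second, expressed in CDs.
per_second_cds = cds_equivalent(6 * 10**9)

# Network: 56 Gb/s compared with domestic connections of 100 Mb/s.
home_links = (56 * 10**9) / (100 * 10**6)

print(f"Capacity: about {capacity_cds:,.0f} CDs")
print(f"Throughput: about {per_second_cds:.1f} CDs per second")
print(f"Network: {home_links:.0f} domestic connections")
```

Under these assumptions the capacity comes out slightly below the rounded figure of 60,000 CDs, and the throughput rounds up to the 9 CDs per second quoted above.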

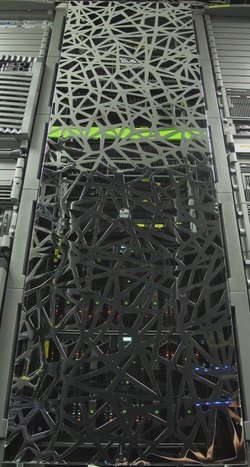

The new resources have been integrated into the Arina cluster and we have named them Katramila, "messy" in Basque, because of the peculiar door of the rack, as you can see in the photograph, and because their integration has been messy.

Below you can find a more detailed description of the equipment:

- 32 BullX nodes with 662 cores oriented to processing, with the following characteristics:

  - 30 blade nodes with 20 cores in 2 Xeon 2680v2 processors at 2.8 GHz, 128 GB of RAM at 1867 MHz and a 128 GB solid-state disk.

  - 1 node with 2 Nvidia Kepler K20 GPUs with 5 GB of RAM, 20 cores in 2 Xeon 2680v2 processors at 2.8 GHz, 128 GB of RAM at 1867 MHz and a 128 GB solid-state disk.

  - 1 node with 512 GB of RAM at 1600 MHz, 32 cores in 4 Xeon 4620 v2 processors at 2.6 GHz and 1 TB of hard disk.

- 1 high-performance, high-availability file system based on Lustre and built with 4 BullX servers and 5 NetApp E2700 storage systems, with 40 TB of capacity, 6.7 GB/s write speed and 5.1 GB/s read speed.

- An FDR InfiniBand network with low latency and high bandwidth (56 Gb/s) connecting all the elements.

- A BullX administration node with 2 Xeon 2650v2 processors with 8 cores each at 2.6 GHz, 128 GB of RAM at 1867 MHz and 3 TB of disk in a mirrored configuration.

- Other elements such as the rack, service network, administration tool, etc.
